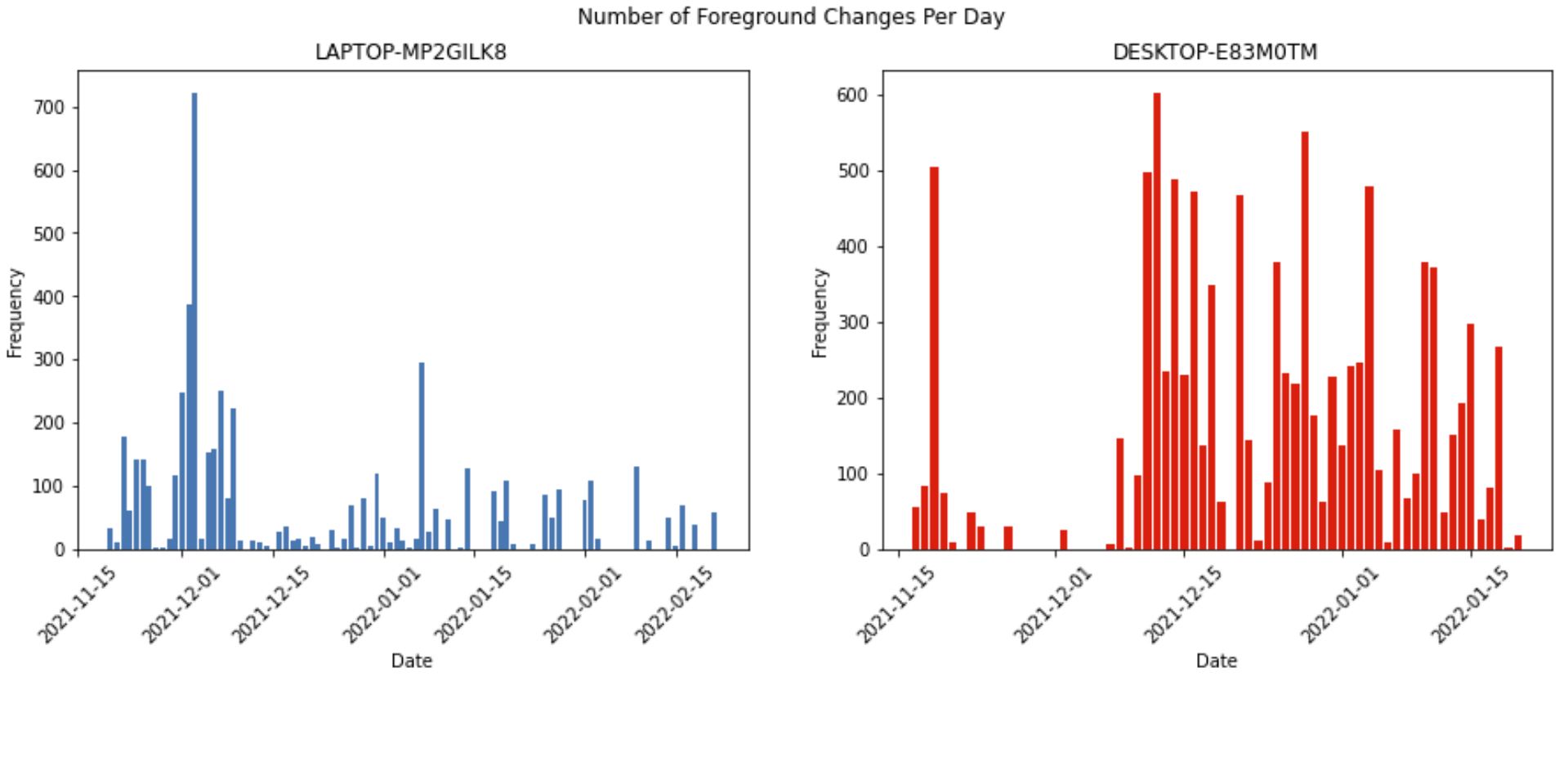

We addressed two predictive tasks using the collected data. For the first task, we built models to predict the next application a user would open based on their previous activity. For the second task, we built a model to predict how long the next application would be used, again based on previous activity.

Task 1: Next-App Prediction

- Approach 1: Hidden Markov Model

- Approach 2: Long Short-Term Memory

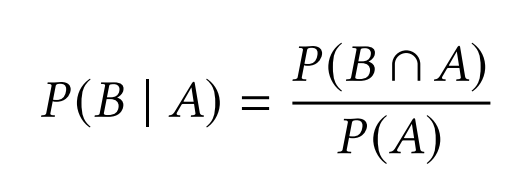

The Hidden Markov Model (HMM) is a machine learning approach that ingests sequences of time-ordered events in order to predict future events. For our purposes, we manually implemented a First Order HMM (with a class interface similar to that of scikit-learn models) which looks at a single previous application in order to predict the single next application. An HMM’s “order” refers to the number of previous applications, or “look-back”, that the HMM uses to generate a single next prediction; our First Order HMM therefore uses a single previous event to predict a single next event. This is equivalent to computing the conditional probability of each unique two-event sequence. For a given previous event A, the probability of the next event B is estimated from the training counts as:

$$P(B \mid A) = \frac{\text{count}(A \rightarrow B)}{\text{count}(A)}$$

where count(A → B) is the number of times B immediately follows A and count(A) is the number of times A is followed by any event.

These probabilities are calculated during model training and stored in a posterior matrix held as an instance variable. They are then looked up at prediction time. Given an input foreground of notepad++.exe, for example, the probability of explorer.exe being the next foreground is approximately 43%. One special feature of our First Order HMM is its custom predict function, which takes an optional parameter n_foregrounds specifying how many foregrounds to return for a single input, chosen in order of highest probability. A predict call with n_foregrounds = 3 returns a list of three applications, such as [‘explorer.exe’, ‘mmc.exe’, ‘chrome.exe’]. Accuracy for a single observation is therefore determined by whether the true foreground application appears in the list of predicted foreground applications. Using n_foregrounds = 3 on our testing dataset, we achieve a final accuracy of 70.05%.
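To make the counting and lookup concrete, here is a minimal sketch of a first-order transition model with a top-n predict method. It is not the project's actual code: the class name `FirstOrderTransitionModel`, the attribute `transition_probs_`, and the toy sequence are all assumptions made for illustration, and a nested dictionary stands in for the posterior matrix.

```python
from collections import Counter, defaultdict


class FirstOrderTransitionModel:
    """Illustrative first-order model; names are hypothetical, not the authors' code."""

    def fit(self, sequence):
        # Count every adjacent (previous, next) pair in the training sequence.
        counts = defaultdict(Counter)
        for prev_app, next_app in zip(sequence, sequence[1:]):
            counts[prev_app][next_app] += 1
        # Normalise each row of counts into conditional probabilities P(next | prev).
        self.transition_probs_ = {
            prev_app: {app: n / sum(row.values()) for app, n in row.items()}
            for prev_app, row in counts.items()
        }
        return self

    def predict(self, prev_app, n_foregrounds=1):
        # Return the n most probable next apps; an unseen input gives an empty list.
        row = self.transition_probs_.get(prev_app, {})
        ranked = sorted(row, key=row.get, reverse=True)
        return ranked[:n_foregrounds]


# Toy sequence invented for illustration only.
toy_sequence = [
    "notepad++.exe", "explorer.exe", "chrome.exe",
    "notepad++.exe", "explorer.exe", "notepad++.exe", "chrome.exe",
]
model = FirstOrderTransitionModel().fit(toy_sequence)
top_two = model.predict("notepad++.exe", n_foregrounds=2)
```

In this toy sequence, notepad++.exe is followed by explorer.exe twice and by chrome.exe once, so the model ranks explorer.exe first with an estimated probability of 2/3.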

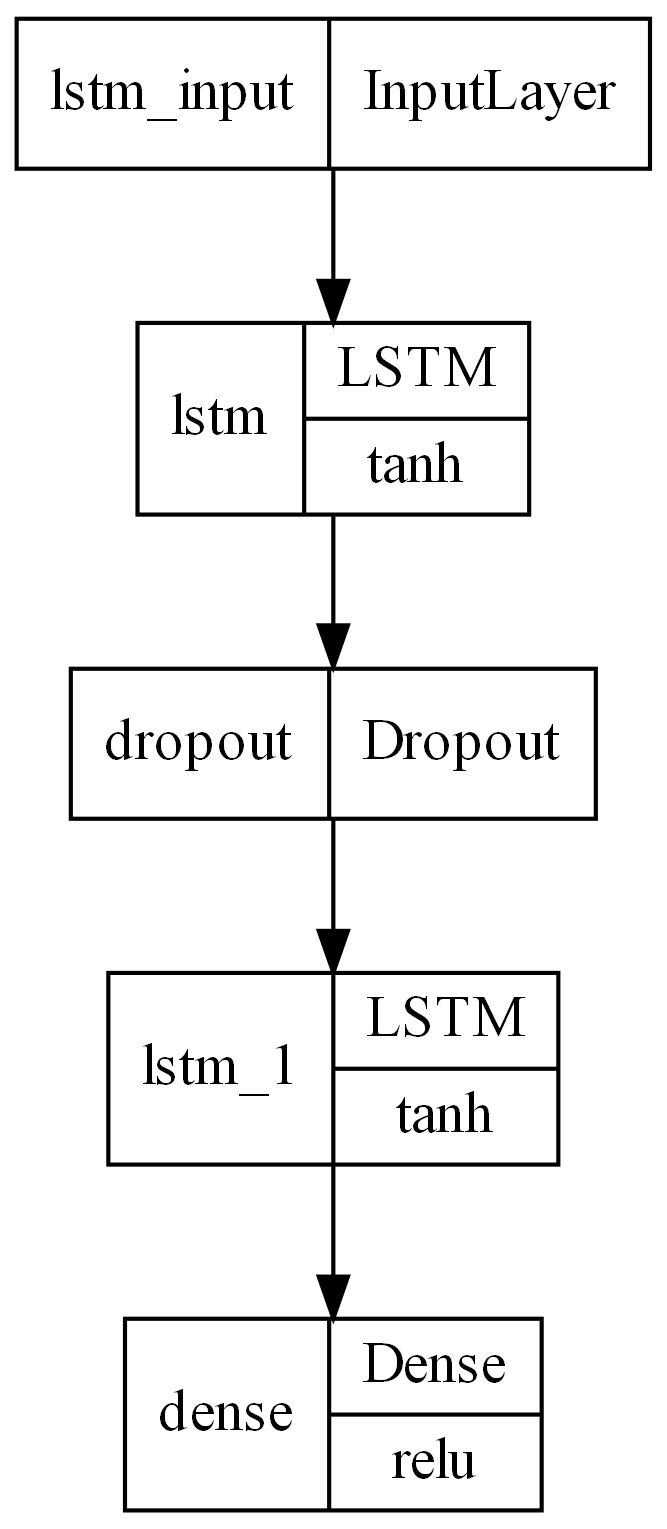

Though our Hidden Markov Model implementation was fairly successful, we wanted to approach the task from a different angle, so we also built a Long Short-Term Memory Recurrent Neural Network (LSTM RNN) model. Because LSTMs are more complex than HMMs, we developed our model using Keras, a deep learning API written in Python that streamlines building and training models.

Like our HMM, our LSTM returned a list of the top four most likely applications to follow the current foreground application, ordered by confidence. Accuracy was calculated with the same function (a single observation was labeled accurate if the true next foreground application appeared in the list of predictions). Our LSTM model had a test accuracy of 68.60%, a value similar to our HMM accuracy.
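The list-membership accuracy used for both models can be sketched as follows. The function name `top_k_accuracy` and the toy data are assumptions for illustration; they are not taken from the project's code or results.

```python
def top_k_accuracy(true_apps, predicted_lists):
    """Fraction of observations whose true app appears in its prediction list."""
    if not true_apps:
        raise ValueError("no observations to score")
    hits = sum(
        1 for truth, preds in zip(true_apps, predicted_lists) if truth in preds
    )
    return hits / len(true_apps)


# Invented toy data, not the project's results.
true_apps = ["chrome.exe", "explorer.exe", "mmc.exe", "cmd.exe"]
predicted_lists = [
    ["explorer.exe", "chrome.exe", "mmc.exe"],
    ["explorer.exe", "mmc.exe", "chrome.exe"],
    ["chrome.exe", "explorer.exe", "notepad++.exe"],
    ["explorer.exe", "mmc.exe", "chrome.exe"],
]
accuracy = top_k_accuracy(true_apps, predicted_lists)
```

Here the first two observations count as hits and the last two as misses, so the toy accuracy is 0.5. Note that a longer prediction list can only keep or raise this score, so accuracies are most comparable when both models return the same number of predictions.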

Task 2: Foreground App Duration

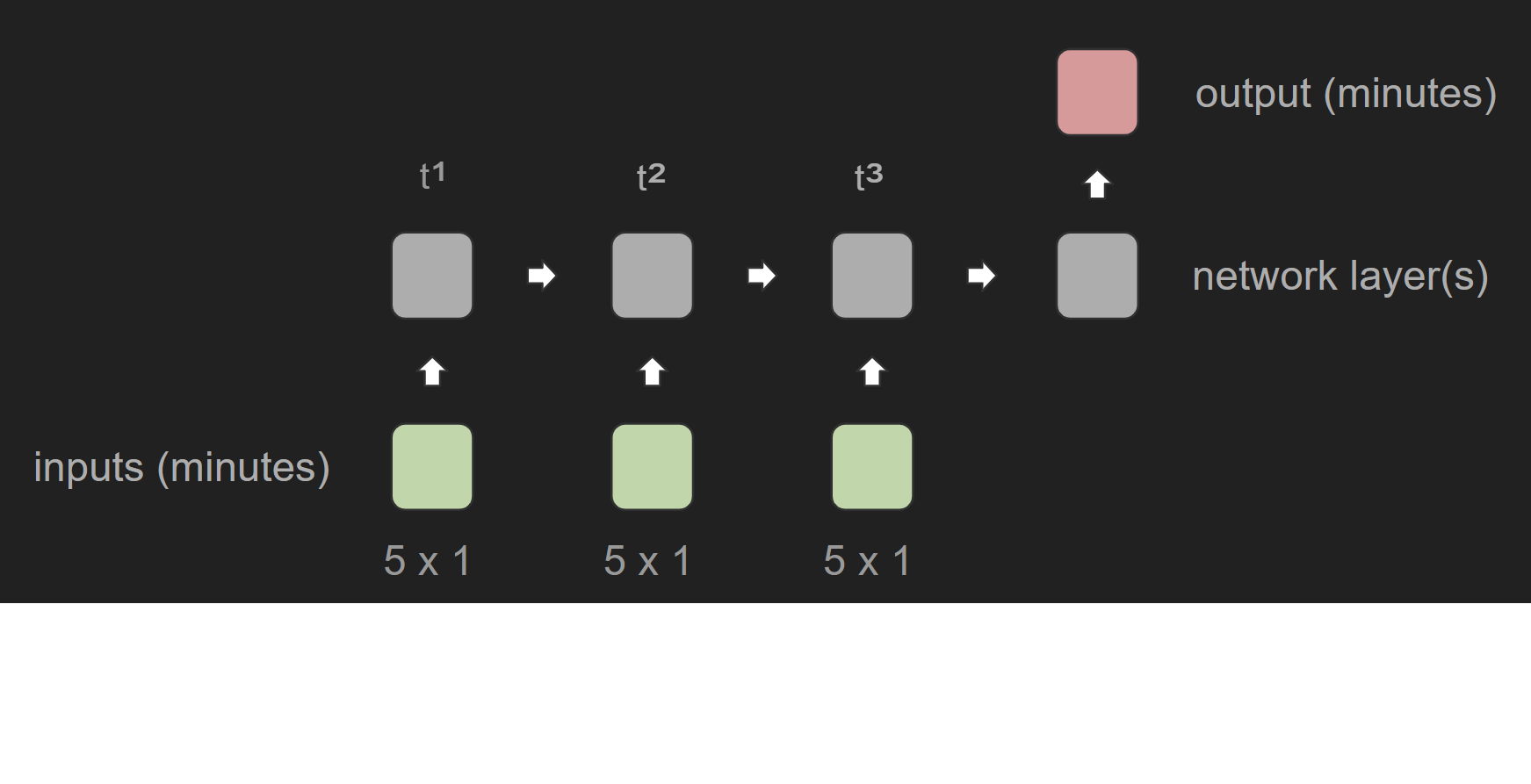

Because of the flexibility of the LSTM model, we decided to use an LSTM for task 2, predicting the duration of the next application, as well. Our task 1 LSTM model used a “look-back” value of one previous foreground application to predict one future foreground application, where “look-back” is defined as the number of previous events a single input uses to generate the next output. To improve accuracy, our task 2 LSTM used a look-back value of five.
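A look-back of five amounts to slicing the event sequence into overlapping windows of five inputs, each paired with the value that follows. The sketch below shows one way to build those pairs; the helper name `make_windows` and the toy durations are hypothetical, and the project's actual preprocessing may differ.

```python
def make_windows(values, look_back):
    """Pair each run of `look_back` consecutive values with the value that follows."""
    inputs, targets = [], []
    for end in range(look_back, len(values)):
        inputs.append(values[end - look_back:end])  # the previous `look_back` events
        targets.append(values[end])                 # the event to predict
    return inputs, targets


# Toy durations in minutes, invented for illustration.
durations = [0.5, 2.0, 1.0, 3.5, 0.2, 1.5, 4.0]
inputs, targets = make_windows(durations, look_back=5)
```

With seven toy values and a look-back of five, this yields two training pairs: the first five durations predict the sixth, and durations two through six predict the seventh.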

With a look-back of five, the model uses the previous five data points to predict the next one. The task 2 model architecture is similar to our task 1 model, with the four layers in the same order. However, we used an MAE (mean absolute error) loss function, a ReLU activation function, and 25 epochs instead. After training, our model made predictions with a mean absolute error of 0.74 minutes. Given that the mean foreground duration in our testing dataset is 1.73 minutes, our model's predicted durations were off by approximately 44 seconds on average.
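For reference, mean absolute error is the average of the absolute differences between predicted and actual durations. The short sketch below computes it on invented toy values and then converts the reported 0.74 minutes into seconds; the function name and the toy data are assumptions, and only the 0.74 figure comes from the results above.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between paired actual and predicted values."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Toy durations in minutes, invented for illustration.
actual_minutes = [1.0, 2.5, 0.5, 3.0]
predicted_minutes = [1.5, 2.0, 1.0, 2.0]
mae_minutes = mean_absolute_error(actual_minutes, predicted_minutes)

# Converting the reported MAE of 0.74 minutes into seconds.
reported_mae_seconds = 0.74 * 60
```

The toy errors are 0.5, 0.5, 0.5, and 1.0 minutes, giving an MAE of 0.625 minutes. The conversion of the reported figure gives 44.4 seconds, which matches the roughly 44 seconds quoted above.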